背景介绍

电商平台中有海量的非结构化文本数据,如商品描述、用户评论、用户搜索词、用户咨询等。这些文本数据不仅反映了产品特性,也蕴含了用户的需求以及使用反馈。通过深度挖掘,可以精细化定位产品与服务的不足。

用户评论能反映出用户对商品、服务的关注点和不满意点。评论从情感分析上可以分为正面与负面。细粒度上也可以将负面评论按照业务环节进行分类,便于定位哪个环节需要不断优化。

数据

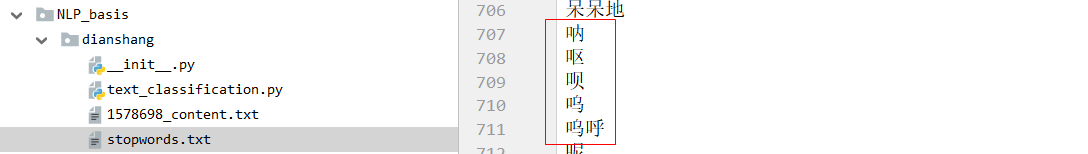

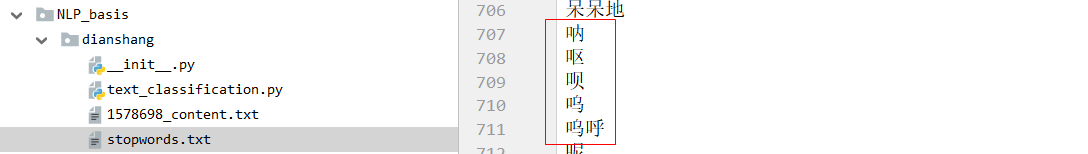

分词词典:

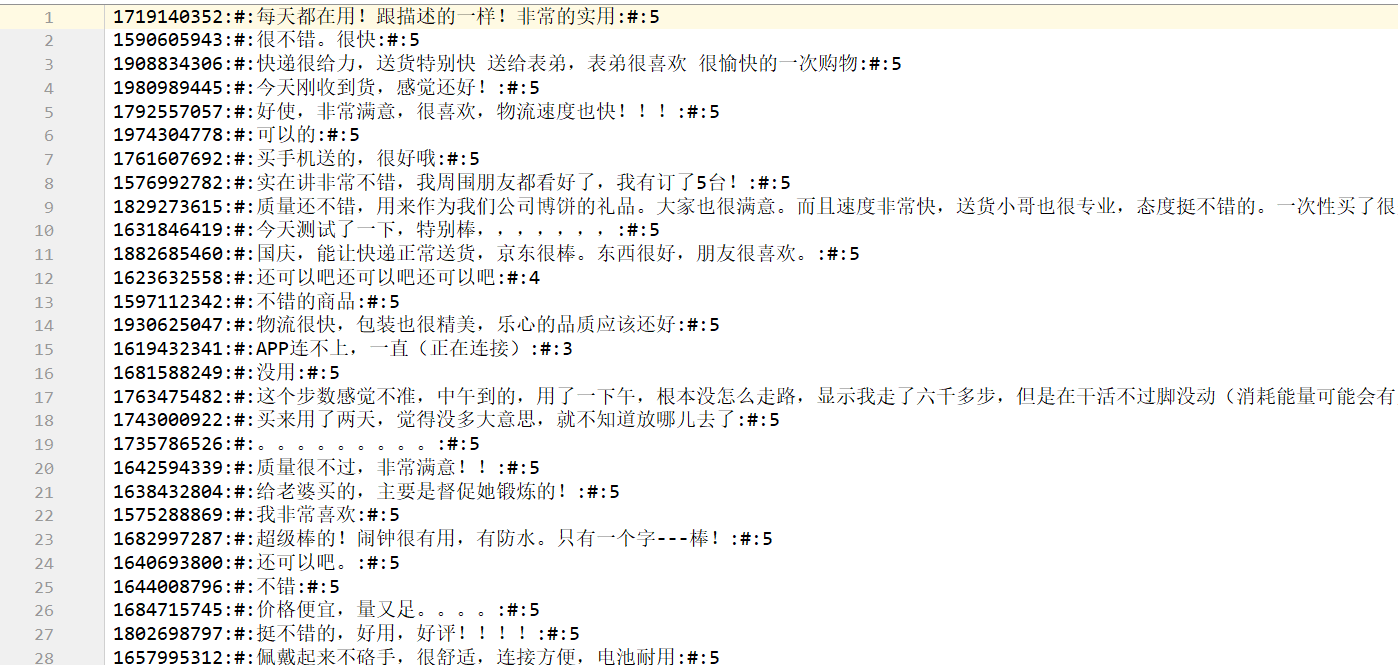

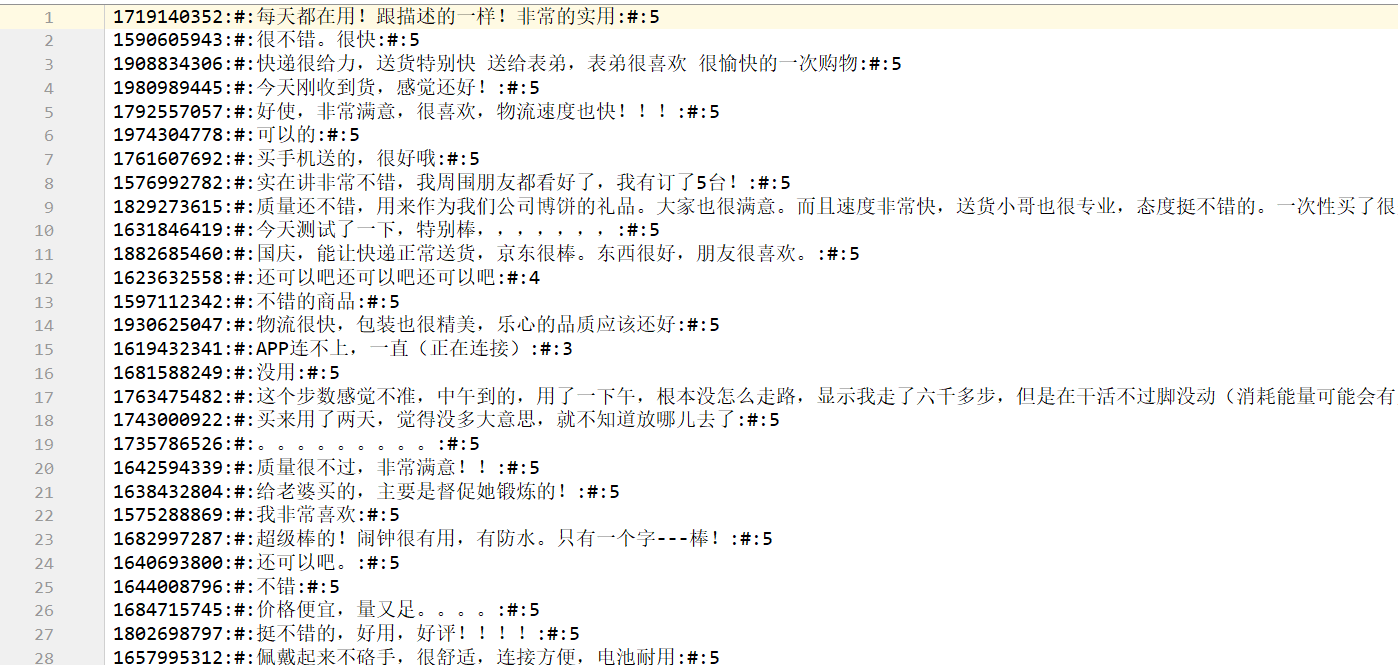

电商评论数据:

代码实现

1 | ''' |

电商平台中有海量的非结构化文本数据,如商品描述、用户评论、用户搜索词、用户咨询等。这些文本数据不仅反映了产品特性,也蕴含了用户的需求以及使用反馈。通过深度挖掘,可以精细化定位产品与服务的不足。

用户评论能反映出用户对商品、服务的关注点和不满意点。评论从情感分析上可以分为正面与负面。细粒度上也可以将负面评论按照业务环节进行分类,便于定位哪个环节需要不断优化。

分词词典:

电商评论数据:

1 | ''' |